JokerGAN: Memory-Efficient Model for Handwritten Text Generation with Text Line Awareness

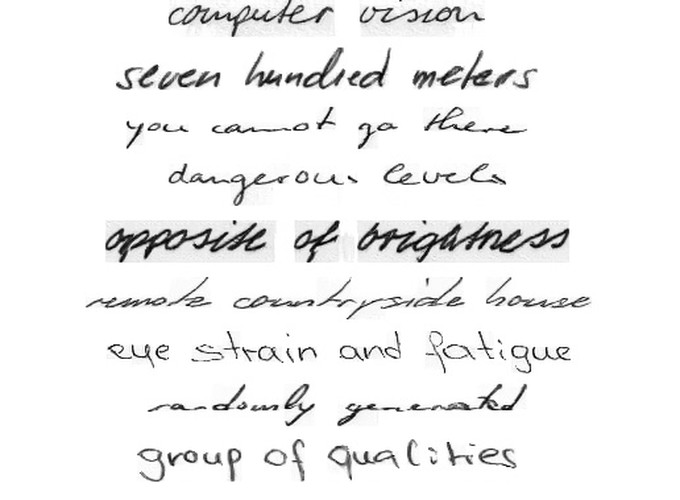

Examples of handwritten text images generated by our proposed model.

Abstract

Collecting labeled data to train models for image recognition problems, including handwritten text recognition (HTR), is tedious and expensive. Recent work on handwritten text generation shows that generative models can serve as a data augmentation method that improves the performance of HTR systems. We propose a new method for handwritten text generation that uses generative adversarial networks with multi-class conditional batch normalization, which enables us to use character sequences of variable length as conditional input. Compared to existing methods, it has significantly lower memory requirements, which remain almost constant regardless of the size of the character set. This allows us to train a generative model for languages with a large number of characters, such as Japanese. We also introduce an additional condition that makes the generator aware of the vertical properties of the characters in the generated sequence, which helps it generate text whose characters are well aligned within the text line. Experiments on handwritten text datasets show that our proposed model can be used to boost the performance of HTR, particularly when only partially annotated data is available and the generative model is trained in a semi-supervised fashion. The results also show that our model outperforms the current state of the art in handwritten text generation. In addition, a human evaluation study indicates that the proposed method generates handwritten text images that look more realistic and natural.
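To make the conditioning mechanism concrete, the following is a minimal sketch of one plausible reading of multi-class conditional batch normalization, and not necessarily the exact formulation used in the model. It assumes that the feature map is split along its width into equal slices, one per character, and that each slice is scaled and shifted by a per-channel pair (gamma, beta) looked up by that character's class. The function name `multi_class_cbn`, the equal-width slicing, the normalization over a single sample instead of a batch, and all sizes are illustrative assumptions.

```python
import random
from statistics import fmean, pvariance


def multi_class_cbn(features, char_ids, gamma, beta, eps=1e-5):
    """Hypothetical multi-class conditional normalization for one sample.

    features: nested lists of shape C x H x W (channels, rows, columns).
    char_ids: one class index per character in the text.
    gamma, beta: per-class tables, gamma[k][c] and beta[k][c] for class k, channel c.
    Assumption: W is divisible by len(char_ids); each character owns W / len(char_ids) columns.
    """
    width = len(features[0][0])
    if width % len(char_ids) != 0:
        raise ValueError("width must be a multiple of the number of characters")
    slice_w = width // len(char_ids)

    out = []
    for c, channel in enumerate(features):
        # Normalize each channel over its spatial positions
        # (a real batch norm would also pool statistics over the batch).
        values = [v for row in channel for v in row]
        mean = fmean(values)
        std = (pvariance(values, mean) + eps) ** 0.5

        new_channel = []
        for row in channel:
            new_row = []
            for x, v in enumerate(row):
                k = char_ids[x // slice_w]  # class of the character owning column x
                new_row.append(gamma[k][c] * (v - mean) / std + beta[k][c])
            new_channel.append(new_row)
        out.append(new_channel)
    return out


def random_features(channels, height, width):
    """Random C x H x W feature map, standing in for a generator activation."""
    return [[[random.gauss(0.0, 1.0) for _ in range(width)]
             for _ in range(height)]
            for _ in range(channels)]


random.seed(0)
C, H, SLICE_W, VOCAB = 2, 3, 4, 5

# Stand-ins for learned parameters: one (gamma, beta) pair per class and channel.
gamma = [[1.0 + 0.1 * k for _ in range(C)] for k in range(VOCAB)]
beta = [[float(k) for _ in range(C)] for k in range(VOCAB)]

# The same function handles texts of different lengths; only the width changes.
short_out = multi_class_cbn(random_features(C, H, 2 * SLICE_W), [1, 3], gamma, beta)
long_out = multi_class_cbn(random_features(C, H, 5 * SLICE_W), [0, 4, 2, 2, 1], gamma, beta)

# Number of character-specific parameters in this layer: two vectors of length C per class.
per_layer_char_params = VOCAB * 2 * C
```

Under these assumptions, the only character-specific state in a layer is two length-C vectors per class, while any convolutional weights are shared across all characters. This is consistent with memory use that is nearly independent of the character set size, and the width-wise slicing is what lets the text length vary.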